Machine learning has transformed how organizations build products, make decisions, and automate complex tasks. Yet, behind every successful model lies a carefully designed workflow that turns raw data into reliable predictions. Implementing a machine learning workflow from scratch can seem overwhelming at first, but with a structured approach, it becomes a manageable and even rewarding engineering process. This article walks through each step in detail, offering practical guidance for building a robust system from the ground up.

TL;DR: A successful machine learning workflow starts with clearly defining the problem and collecting high-quality data. It continues through data preprocessing, feature engineering, model selection, evaluation, deployment, and ongoing monitoring. Each phase requires thoughtful decisions, experimentation, and documentation. To achieve long-term success, treat the workflow as an iterative cycle rather than a one-time task.

1. Define the Problem Clearly

Every machine learning project begins with a well-defined objective. Without one, even the most advanced algorithms are unlikely to deliver meaningful value. Ask yourself:

- What problem am I solving?

- Is it a classification, regression, clustering, or ranking task?

- What does success look like?

- How will the model’s predictions be used in practice?

Translate business goals into measurable machine learning objectives. For example, instead of saying “improve customer experience,” define it as “predict customer churn with at least 85% recall.” This alignment ensures your technical solution maps directly to real-world value.

At this stage, it is also important to establish constraints such as latency requirements, interpretability needs, and budget limitations. These factors heavily influence architectural and model choices later on.
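One way to keep the objective and constraints from shifting during the project is to write them down next to the code. The sketch below is hypothetical: `ProblemSpec`, its fields, and the 50 ms latency budget are illustrative assumptions. Only the 85% recall target comes from the churn example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemSpec:
    """Hypothetical record of the success criterion and constraints agreed on up front."""

    task: str                     # e.g. "binary classification"
    metric: str                   # metric the business goal was translated into
    target: float                 # minimum acceptable value of that metric
    max_latency_ms: float         # serving constraint
    needs_interpretability: bool  # whether stakeholders need explainable predictions

    def is_met(self, metric_value: float, latency_ms: float) -> bool:
        """True only if the model reaches the metric target within the latency budget."""
        return metric_value >= self.target and latency_ms <= self.max_latency_ms


churn_spec = ProblemSpec(
    task="binary classification",
    metric="recall",
    target=0.85,
    max_latency_ms=50.0,  # hypothetical budget
    needs_interpretability=True,
)
print(churn_spec.is_met(metric_value=0.87, latency_ms=30.0))  # True
print(churn_spec.is_met(metric_value=0.80, latency_ms=30.0))  # False
```

Freezing the dataclass means candidate models are all judged against the same criterion, so "what does success look like?" stays an explicit, reviewable decision.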

2. Collect and Understand the Data

Data is the foundation of any machine learning system. Before model building begins, gather the relevant datasets from databases, APIs, logs, sensors, or third-party providers.

Once collected, perform exploratory data analysis (EDA) to understand patterns, distributions, missing values, and anomalies. Some practical steps include:

- Viewing summary statistics (mean, median, standard deviation)

- Checking class balance in classification problems

- Visualizing distributions with histograms and box plots

- Identifying correlations between variables
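A first pass over these checks needs little more than the standard library. The sketch assumes columns are plain Python lists with `None` marking missing values; the column names and values are hypothetical. With pandas, `DataFrame.describe()` and `DataFrame.corr()` cover the same ground for whole tables, and Python 3.10+ also ships `statistics.correlation`.

```python
import math
import statistics
from collections import Counter


def summarize(values):
    """Summary statistics for one numeric column, skipping missing (None) entries."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "stdev": statistics.stdev(present),  # sample standard deviation
    }


def class_balance(labels):
    """Fraction of rows belonging to each class label."""
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric columns with no missing values."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical toy columns from a churn dataset
tenure_months = [1, 12, 24, None, 36, 6]
monthly_spend = [80.0, 60.0, 45.0, 70.0, 30.0, 75.0]
churned = [1, 0, 0, 1, 0, 1]

print(summarize(tenure_months))
print(class_balance(churned))  # {1: 0.5, 0: 0.5}
print(pearson(monthly_spend, churned))
```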

This exploration often reveals potential issues such as biased sampling, inconsistent formatting, or data leakage. Fixing these early prevents costly problems later in the workflow.

3. Clean and Preprocess the Data

Raw data is rarely ready for model training. Preprocessing transforms messy inputs into structured formats suitable for algorithms.

Common preprocessing steps include:

- Handling missing values: Remove rows, impute with mean or median, or use predictive imputation.

- Encoding categorical variables: Apply one-hot encoding, or label (ordinal) encoding when categories have a natural order or the model is tree-based.

- Scaling numerical features: Normalize or standardize values for scale-sensitive algorithms such as k-nearest neighbors, support vector machines, and models trained with gradient descent.

- Removing duplicates: Drop repeated records so they do not overweight some examples or end up in both the training and test sets.

- Splitting the dataset: Create training, validation, and test sets.

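A typical split allocates 70% of the data to training, 15% to validation, and 15% to testing. Although splitting appears last in the list above, do it before fitting any step that learns from the data: compute imputation values, scaling parameters, and category encodings on the training set only, then apply them unchanged to the validation and test sets. Otherwise, information from held-out data leaks into training. The test set should remain untouched until final evaluation so that it provides an unbiased measure of performance.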
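A plain random 70/15/15 split can be sketched with the standard library. The function name and the fixed seed are assumptions. scikit-learn's `train_test_split` does the same job (applied twice to get three sets) and also supports stratification. For time-ordered data such as transactions, splitting by date is usually safer than shuffling, because a random split lets the model train on the future.

```python
import random


def train_val_test_split(rows, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then slice into train, validation and test sets.

    Whatever is left after the train and validation slices (about 15% here) is the test set.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Because the seed is fixed, rerunning the script reproduces exactly the same split, which keeps later comparisons between models fair.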

Automation is highly recommended. Use reproducible scripts or pipelines to ensure preprocessing can be repeated consistently in production.
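Below is a minimal sketch of such a pipeline in plain Python, assuming rows arrive as dictionaries and categorical columns have no missing values. `TabularPreprocessor` and `drop_duplicates` are hypothetical names. The key design choice is the fit/transform split: `fit` learns medians, means, standard deviations, and category lists from training rows only, and `transform` reuses those stored values, so validation, test, and production data are processed exactly like training data without leaking information back into it. scikit-learn's `Pipeline`, `SimpleImputer`, `OneHotEncoder`, and `StandardScaler` follow the same pattern.

```python
import statistics


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row (values must be hashable)."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


class TabularPreprocessor:
    """Median imputation + standardization for numeric columns, one-hot for categorical ones."""

    def __init__(self, numeric_cols, categorical_cols):
        self.numeric_cols = numeric_cols
        self.categorical_cols = categorical_cols

    def fit(self, rows):
        """Learn every statistic from the training rows only."""
        self.medians, self.means, self.stdevs = {}, {}, {}
        for col in self.numeric_cols:
            present = [r[col] for r in rows if r[col] is not None]
            self.medians[col] = statistics.median(present)
            filled = [self.medians[col] if r[col] is None else r[col] for r in rows]
            self.means[col] = statistics.mean(filled)
            self.stdevs[col] = statistics.pstdev(filled) or 1.0  # guard against constant columns
        self.categories = {col: sorted({r[col] for r in rows}) for col in self.categorical_cols}
        return self

    def transform(self, rows):
        """Apply the stored statistics; unseen categories encode as all zeros."""
        matrix = []
        for r in rows:
            features = []
            for col in self.numeric_cols:
                value = self.medians[col] if r[col] is None else r[col]
                features.append((value - self.means[col]) / self.stdevs[col])
            for col in self.categorical_cols:
                features.extend(1.0 if r[col] == c else 0.0 for c in self.categories[col])
            matrix.append(features)
        return matrix


train_rows = drop_duplicates([
    {"tenure": 1, "plan": "basic"},
    {"tenure": None, "plan": "pro"},
    {"tenure": 5, "plan": "basic"},
    {"tenure": 1, "plan": "basic"},  # exact duplicate of the first row
])
prep = TabularPreprocessor(["tenure"], ["plan"]).fit(train_rows)
print(prep.transform([{"tenure": None, "plan": "enterprise"}]))  # [[0.0, 0.0, 0.0]]
```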

4. Perform Feature Engineering

Feature engineering is both an art and a science. Well-crafted features often matter more than complex algorithms.

This step may involve:

- Creating interaction terms between variables

- Extracting date components such as day of week or month

- Aggregating transactional data, such as total spend or transaction count per customer

- Extracting embeddings from text or images
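Three of these transformations are easy to sketch in plain Python, assuming timestamps are `datetime` objects and transactions arrive as `(customer_id, amount)` pairs. The function names are hypothetical. Keeping each transformation in a named function also makes it straightforward to call the same code at training and inference time.

```python
from collections import defaultdict
from datetime import datetime


def date_features(ts: datetime) -> dict:
    """Calendar components of a timestamp (Monday is day 0)."""
    return {"day_of_week": ts.weekday(), "month": ts.month, "hour": ts.hour}


def aggregate_transactions(transactions):
    """Per-customer transaction count, total amount and mean amount."""
    totals, counts = defaultdict(float), defaultdict(int)
    for customer_id, amount in transactions:
        totals[customer_id] += amount
        counts[customer_id] += 1
    return {
        cid: {"n_tx": counts[cid], "total": totals[cid], "mean": totals[cid] / counts[cid]}
        for cid in totals
    }


def interaction(a: float, b: float) -> float:
    """Simple multiplicative interaction term between two numeric features."""
    return a * b


print(date_features(datetime(2024, 3, 15, 14, 30)))  # {'day_of_week': 4, 'month': 3, 'hour': 14}
print(aggregate_transactions([("a", 10.0), ("b", 5.0), ("a", 30.0)]))
```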

Domain knowledge plays a major role here. For instance, in a fraud detection system, the frequency of transactions within a short timeframe might be more predictive than total transaction amount.
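The fraud example can be made concrete with a "velocity" feature: for each transaction, count how many of the same customer's transactions occurred in the preceding hour. The one-hour window and the function name are assumptions. The feature only looks backward in time, so it can be computed the same way during training and at inference.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def recent_tx_counts(timestamps, window=timedelta(hours=1)):
    """For one customer's transactions (sorted oldest first), count the transactions
    in [t - window, t] for each timestamp t, including the current one."""
    counts = []
    for i, ts in enumerate(timestamps):
        # First index whose timestamp is >= ts - window, searching only up to i
        start = bisect_left(timestamps, ts - window, 0, i + 1)
        counts.append(i + 1 - start)
    return counts


base = datetime(2024, 1, 1, 12, 0)
history = [base, base + timedelta(minutes=10), base + timedelta(minutes=20), base + timedelta(hours=3)]
print(recent_tx_counts(history))  # [1, 2, 3, 1]
```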

Carefully track feature transformations. Document how each feature is derived so the same logic can be applied during inference. Inconsistent feature generation between training and production is a common cause of failure.

5. Select and Train a Model

With clean and engineered data ready, you can begin model selection. The choice depends on the problem type, dataset size, and interpretability requirements.

Some common model families include:

- Linear models: Logistic regression, linear regression

- Tree-based models: Decision trees, random forests, gradient boosting

- Neural networks: Deep learning architectures for complex patterns

- Clustering algorithms: k-means, hierarchical clustering

Start simple. Often, a baseline logistic regression or decision tree provides surprisingly strong performance. Establishing a baseline gives you a benchmark for improvement.

Train the model on the training set and evaluate it on the validation set. Techniques such as k-fold cross-validation give more stable performance estimates than a single split. Tune hyperparameters with grid search or randomized search, choosing the settings that score best on validation data; the test set plays no part in tuning.
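The sketch below shows k-fold cross-validation driving a small grid search. To keep it short, it tunes the `k` of a k-nearest-neighbors classifier, a model chosen here only for brevity; the fold count, seed, grid values, and toy data are all assumptions. Every score comes from held-out folds of the training data, never from the test set. scikit-learn's `KFold` and `GridSearchCV` implement the same idea.

```python
import random
from collections import Counter


def knn_predict(train_X, train_y, x, k):
    """Majority label among the k training points nearest to x (squared Euclidean distance)."""
    nearest = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], x)),
    )[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def k_fold_indices(n, n_folds, seed=0):
    """Shuffle row indices once with a fixed seed, then deal them into n_folds folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::n_folds] for f in range(n_folds)]


def cross_val_accuracy(X, y, k, n_folds=5):
    """Mean accuracy over folds, each fold scored by a model that never saw it."""
    fold_scores = []
    for fold in k_fold_indices(len(X), n_folds):
        held_out = set(fold)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        train_X = [X[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        correct = sum(knn_predict(train_X, train_y, X[j], k) == y[j] for j in fold)
        fold_scores.append(correct / len(fold))
    return sum(fold_scores) / len(fold_scores)


def grid_search(X, y, k_values, n_folds=5):
    """Score every candidate k by cross-validation; return the best one and all scores."""
    results = {k: cross_val_accuracy(X, y, k, n_folds) for k in k_values}
    best_k = max(results, key=results.get)  # first candidate wins ties
    return best_k, results


# Hypothetical two-cluster training data (features already on a common scale)
X = [[0, 0], [0, 1], [1, 0], [1, 1], [0, 0.5], [5, 5], [5, 6], [6, 5], [6, 6], [5.5, 5.5]]
y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
best_k, cv_scores = grid_search(X, y, k_values=[1, 3, 5])
print(best_k, cv_scores)  # 1 {1: 1.0, 3: 1.0, 5: 1.0}
```

Randomized search follows the same loop but samples candidate settings instead of enumerating a full grid, which scales better when there are many hyperparameters.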

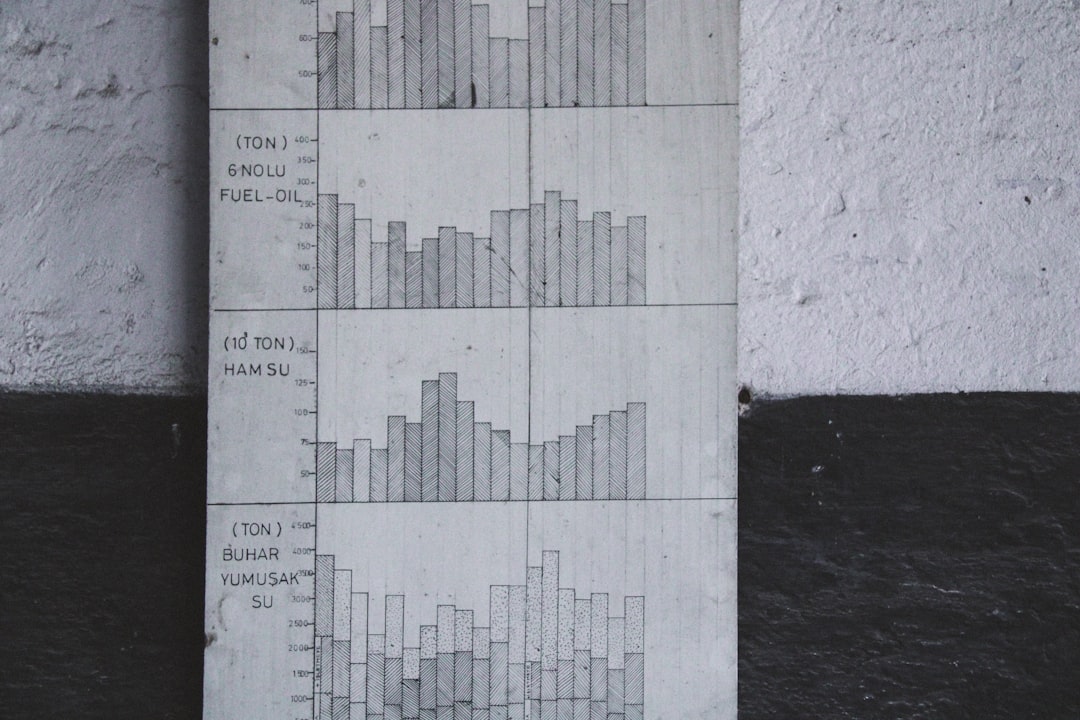

6. Evaluate Model Performance

Evaluation goes beyond checking accuracy. Different problems require different metrics:

- Classification: Precision, recall, F1 score, ROC AUC

- Regression: Mean squared error, mean absolute error, R-squared

- Ranking: Mean average precision, NDCG
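Several of these metrics reduce to short formulas. This plain-Python sketch computes precision, recall, and F1 from hard predictions; ROC AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative (an O(n²) pairwise form that is fine for illustration but slow on large data); and the three regression metrics. Function names and example values are hypothetical; `sklearn.metrics` provides tested equivalents such as `precision_score`, `roc_auc_score`, and `r2_score`.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall and F1 for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Share of (positive, negative) pairs where the positive gets the higher score; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def regression_metrics(y_true, y_pred):
    """Mean squared error, mean absolute error and R-squared."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return {
        "mse": ss_res / n,
        "mae": sum(abs(r) for r in residuals) / n,
        "r2": 1 - ss_res / ss_tot,
    }


print(classification_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 1, 0, 0, 1]))
# {'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
print(regression_metrics([3.0, 5.0, 7.0], [2.0, 5.0, 8.0]))
```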

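Choose metrics aligned with business goals. In medical diagnosis, recall may matter more than precision, because missing a positive case could be harmful. In spam detection, precision might take priority, because sending a legitimate email to the spam folder is usually costlier than letting an occasional spam message through.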

Analyze model errors carefully. Examine false positives and false negatives to discover systematic weaknesses. Sometimes small feature adjustments can significantly improve performance.
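A simple way to look for systematic weaknesses is to break false positives and false negatives down by a segment such as customer plan or region. The sketch assumes rows are dictionaries; `error_breakdown` and the `plan` column are hypothetical.

```python
from collections import defaultdict


def error_breakdown(rows, y_true, y_pred, segment_key):
    """Count rows, false positives and false negatives for each value of one segment column."""
    stats = defaultdict(lambda: {"n": 0, "fp": 0, "fn": 0})
    for row, t, p in zip(rows, y_true, y_pred):
        s = stats[row[segment_key]]
        s["n"] += 1
        s["fp"] += int(t == 0 and p == 1)
        s["fn"] += int(t == 1 and p == 0)
    return dict(stats)


rows = [{"plan": "basic"}, {"plan": "basic"}, {"plan": "pro"}, {"plan": "pro"}]
print(error_breakdown(rows, [1, 0, 1, 0], [0, 0, 1, 1], "plan"))
# {'basic': {'n': 2, 'fp': 0, 'fn': 1}, 'pro': {'n': 2, 'fp': 1, 'fn': 0}}
```

A segment whose error counts are far out of proportion to its size is a good candidate for a new feature or more training data.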

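Finally, once all modeling decisions are made, evaluate the model on the untouched test set to estimate real-world performance. Using the test set repeatedly to guide changes would make that estimate optimistic.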

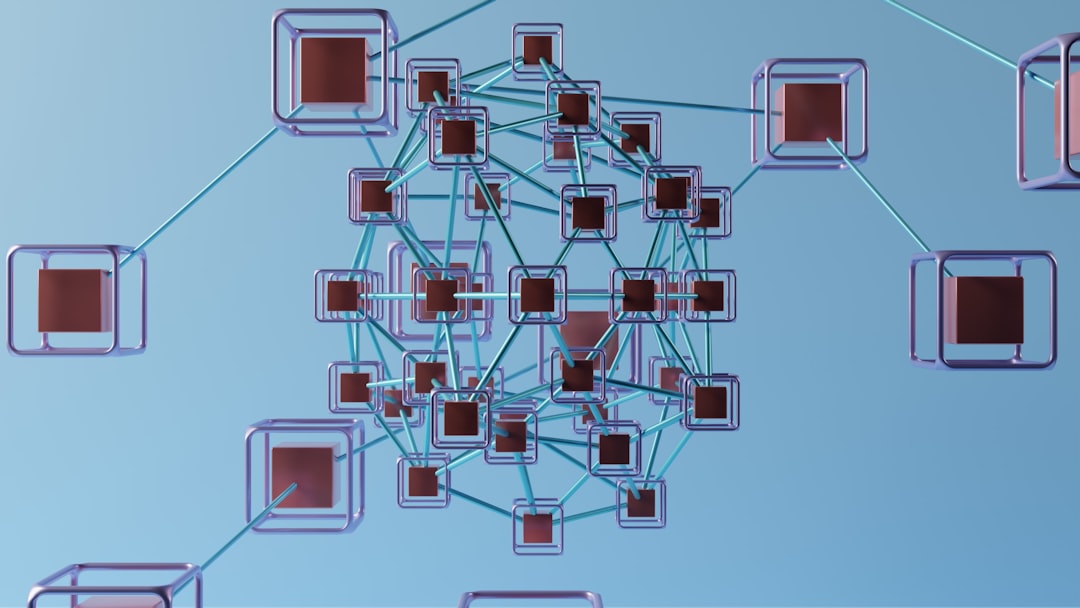

7. Deploy the Model

A trained model delivers value only when integrated into a production environment. Deployment options depend on your application:

- Expose the model through a REST API

- Embed it into a batch processing pipeline

- Integrate it directly into a web or mobile application
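To illustrate the REST option, here is a minimal JSON prediction endpoint built on Python's standard-library `http.server`. The `/predict` route, the payload shape, and the hard-coded weights are hypothetical stand-ins for a real model artifact. The Python documentation warns that `http.server` is not recommended for production, so a real service would typically use a proper web framework or model server; the request and response contract stays the same.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical parameters of an already-trained logistic model
WEIGHTS = [0.8, -0.5]
BIAS = 0.1


def score(features):
    """Probability of the positive class for one feature vector."""
    if len(features) != len(WEIGHTS):
        raise ValueError("wrong number of features")
    z = sum(w * float(x) for w, x in zip(WEIGHTS, features)) + BIAS
    z = max(-500.0, min(500.0, z))  # keep math.exp within range
    return 1.0 / (1.0 + math.exp(-z))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            body, status = {"probability": score(payload["features"])}, 200
        except (ValueError, KeyError, TypeError):
            body, status = {"error": "expected JSON like {\"features\": [number, number]}"}, 400
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host="127.0.0.1", port=8000):
    """Block and serve predictions until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts the server; a request such as `curl -X POST localhost:8000/predict -d '{"features": [1.0, 2.0]}'` should then return a JSON object with a `probability` field. Malformed input gets a 400 response instead of crashing the handler.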

Containerization tools like Docker help ensure consistent runtime environments. Orchestration platforms such as Kubernetes can handle scaling and restarts automatically, although model-specific monitoring usually has to be added separately.

Important deployment considerations include:

- Latency: Can predictions be generated quickly enough?

- Scalability: Can the system handle peak load?

- Security: Are data and predictions protected?

- Monitoring: Are performance metrics tracked continuously?

8. Monitor and Maintain the System

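Machine learning systems often degrade over time due to data drift (changes in the distribution of inputs) and concept drift (changes in the relationship between inputs and the target). Monitoring helps ensure your model maintains its performance after deployment.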

Track:

- Prediction distributions

- Input data changes

- Accuracy over time

- System latency and failures
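One common way to quantify input drift is the population stability index (PSI). It compares the binned distribution of a feature in recent traffic against a reference sample such as the training data: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and recent proportions in bin i. The sketch below uses ten equal-width bins over the reference range and a small floor for empty bins; both are assumptions. The 0.25 alert threshold is a commonly quoted rule of thumb, not a universal standard, and should be tuned per feature.

```python
import math


def population_stability_index(reference, recent, bins=10, floor=1e-6):
    """PSI between a reference sample (e.g. training data) and recent production values."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back if the reference column is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # out-of-range values go to the edge bins
        # Floor empty bins so the logarithm stays finite
        return [max(c / len(values), floor) for c in counts]

    e, a = proportions(reference), proportions(recent)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.25  # rule-of-thumb alert level (assumption)

reference = [i / 100 for i in range(1000)]  # hypothetical training-time values
shifted = [v + 5 for v in reference]        # hypothetical drifted production values
print(population_stability_index(reference, reference))  # 0.0
psi = population_stability_index(reference, shifted)
print(psi > DRIFT_THRESHOLD)  # True
```

Running a check like this per feature on a schedule, and alerting when it crosses the threshold, gives an early signal that retraining may be needed, often before labeled outcomes are available to measure accuracy directly.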

If performance drops, retrain the model with more recent data. Establish automated retraining pipelines when appropriate.

Documentation and logging are critical. Record model versions, training data snapshots, and hyperparameters. This makes results reproducible and debugging much smoother.
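A minimal sketch of such a record follows, assuming the training rows are JSON-serializable. Hashing a canonical serialization of the data produces a fingerprint that changes whenever the data does, which ties each model version to its exact inputs. The function name, fields, version string, and metric value are hypothetical; tools such as MLflow or DVC provide fuller versions of this bookkeeping.

```python
import hashlib
import json
from datetime import datetime, timezone


def training_record(model_version, train_rows, hyperparameters, metrics):
    """Build a JSON-serializable record linking a model version to its data and settings."""
    # Canonical serialization (sorted keys) so identical data always yields the same hash
    data_bytes = json.dumps(train_rows, sort_keys=True).encode("utf-8")
    return {
        "model_version": model_version,
        "trained_at": datetime.now(timezone.utc).isoformat(),
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),
        "n_rows": len(train_rows),
        "hyperparameters": hyperparameters,
        "metrics": metrics,
    }


rows = [{"tenure": 1, "churned": 0}, {"tenure": 5, "churned": 1}]
record = training_record(
    "churn-v1",                               # hypothetical version label
    rows,
    {"learning_rate": 0.1, "epochs": 500},
    {"val_recall": 0.87},                     # hypothetical value
)
print(json.dumps(record, indent=2))
```

Storing this record alongside the saved model makes it possible to answer "which data and settings produced this model?" months later.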

9. Iterate and Improve

Machine learning is not a linear process. It is iterative. Insights gained during evaluation often send you back to feature engineering or data collection.

Continuous improvement might involve:

- Collecting additional data

- Refining features

- Trying more advanced algorithms

- Optimizing hyperparameters further

Each iteration strengthens the pipeline and deepens your understanding of the problem domain.

Best Practices for a Robust Workflow

To build a dependable machine learning workflow from scratch, follow these guiding principles:

- Reproducibility first: Use version control and document experiments.

- Start simple: Build a baseline before increasing complexity.

- Automate: Implement pipelines for preprocessing and training.

- Think end to end: Plan for deployment early, not as an afterthought.

- Collaborate: Work closely with domain experts and stakeholders.

By treating machine learning not as a single model but as a comprehensive workflow, you create systems that are scalable, maintainable, and impactful.

Conclusion

Implementing a machine learning workflow from scratch involves far more than writing a few lines of code. It requires thoughtful problem definition, rigorous data handling, strategic modeling, and ongoing maintenance. Each step builds upon the previous one, forming a structured pipeline that transforms raw information into actionable insight.

Approach the task methodically, embrace iteration, and prioritize clarity and reproducibility. With the right workflow in place, you will not only build accurate models but also create machine learning systems that stand the test of time.